A new reinforcement learning model of the Iowa Gambling Task

Abstract

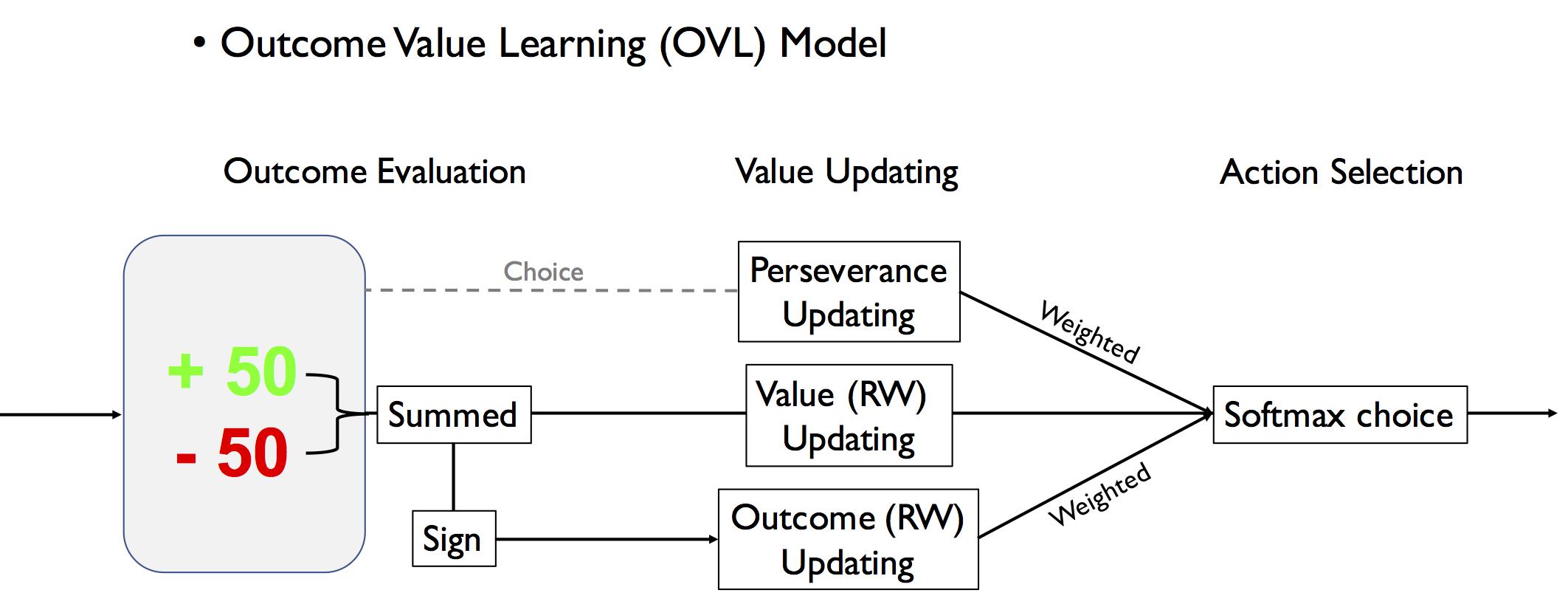

The Iowa Gambling Task (IGT) is widely used to study decision making across a variety of populations. However, the complexity of the IGT makes it difficult to attribute variation in performance to specific cognitive processes. Many cognitive models have been proposed for the IGT to address this problem, but currently no single model shows optimal short- and long-term prediction accuracy (Ahn, Busemeyer, Wagenmakers, & Stout, 2008; Ahn et al., 2014). A review of behavioral performance on the IGT reveals three factors that strongly influence decision making: 1) gain-loss frequency, 2) perseverance, and 3) reversal learning. Here, we propose a new model, the Outcome Value Learning (OVL) model, which explicitly models each of these factors and whose short- and long-term prediction accuracy offers the best compromise among competing models. Finally, applying the OVL model to IGT data collected from drug-using populations reveals distinct patterns of decision making that are reflected in the model parameters.